
I got some feedback on my previous post about benchmarking bhyve and VirtualBox:

@rbogorodskiy Try the e1000 with vbox for networking, and a raw device for the i/o test. For bhyve, virtio-blk is best.

— bhyve dev (@bhyve_dev) October 17, 2016

So I decided to run some more tests: include e1000 in the networking tests and try different drivers and image types in the I/O tests.

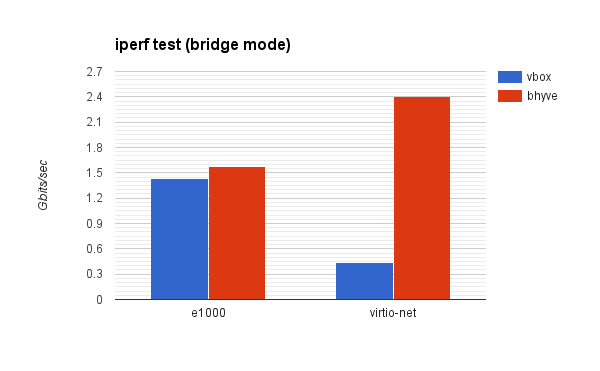

Networking test

This test is a little more extensive than the one in my previous blog post: it now includes both e1000 and virtio-net for VirtualBox and bhyve. The setup is still the same, iperf with bridged networking, and so are the commands. On the VM side I run:

iperf -s

On the host, I run:

iperf -c $vmip

Then I calculate the average of 8 runs. The actual values (in Gbit/s) are:

| | VirtualBox | bhyve |
|---|---|---|
| e1000 | 1.42875 | 1.57375 |
| virtio-net | 0.43275 | 2.4 |
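The post doesn't show how the 8 iperf runs were collected and averaged. A minimal Python sketch of that step could look like the following. It assumes iperf 2's client summary format, where each run ends with a line like `1.43 Gbits/sec`. The regex, the function names and the unit table are illustrative assumptions, not the author's tooling:

```python
import re
from statistics import mean

# Assumed iperf 2 client summary line, e.g.
#   "[  3]  0.0-10.0 sec  1.66 GBytes  1.43 Gbits/sec"
# The pattern captures the number and unit prefix right before "bits/sec".
RATE_RE = re.compile(r"([\d.]+)\s+([KkMG]?)bits/sec")

# Factor that converts each unit prefix to Gbit/s.
TO_GBITS = {"": 1e-9, "K": 1e-6, "k": 1e-6, "M": 1e-3, "G": 1.0}


def rate_gbits(line: str) -> float:
    """Return the bandwidth reported on one iperf summary line, in Gbit/s."""
    match = RATE_RE.search(line)
    if match is None:
        raise ValueError(f"no bandwidth found in: {line!r}")
    value, prefix = match.groups()
    return float(value) * TO_GBITS[prefix]


def average_gbits(lines: list[str]) -> float:
    """Average the per-run bandwidth over several runs (the post uses 8)."""
    return mean(rate_gbits(line) for line in lines)


if __name__ == "__main__":
    # Made-up sample lines for illustration only, not the post's measurements.
    sample = [
        "[  3]  0.0-10.0 sec  1.66 GBytes  1.43 Gbits/sec",
        "[  3]  0.0-10.0 sec  1.62 GBytes  1.39 Gbits/sec",
    ]
    print(f"average: {average_gbits(sample):.3f} Gbit/s")
```

Converting everything to Gbit/s before averaging matters because iperf may switch to Mbits/sec for slower runs, as with the virtio-net results in VirtualBox.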

The most shocking part here is that in VirtualBox, e1000 is more than 3 times faster than virtio-net. This seems a little strange, especially considering that e1000 performance in bhyve is almost the same, while virtio-net in bhyve is approx. 1.5 times faster than e1000 (also a very large difference, but at least virtio is expected to be faster).
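To double-check the ratios discussed above, here is a small Python sketch that recomputes them from the averaged values in the table. The dictionary simply copies the table; nothing here is new data:

```python
# Averaged iperf throughput in Gbit/s, copied from the table above.
RESULTS = {
    ("VirtualBox", "e1000"): 1.42875,
    ("VirtualBox", "virtio-net"): 0.43275,
    ("bhyve", "e1000"): 1.57375,
    ("bhyve", "virtio-net"): 2.4,
}


def speedup(faster: tuple[str, str], slower: tuple[str, str]) -> float:
    """How many times higher the throughput of `faster` is than that of `slower`."""
    return RESULTS[faster] / RESULTS[slower]


COMPARISONS = [
    (("VirtualBox", "e1000"), ("VirtualBox", "virtio-net")),
    (("bhyve", "virtio-net"), ("bhyve", "e1000")),
    (("bhyve", "virtio-net"), ("VirtualBox", "e1000")),
]

for faster, slower in COMPARISONS:
    ratio = speedup(faster, slower)
    print(f"{' '.join(faster)} vs {' '.join(slower)}: {ratio:.2f}x")
```

With these numbers it prints 3.30x, 1.53x and 1.68x. The last line compares bhyve's virtio-net with VirtualBox's e1000, the best option on each side.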

I/O testing

I decided to check the suggestions from the tweet above and started with the disk configuration. I converted my image to a "fixed size" image like this:

VBoxManage clonehd uefi_fbsd_20gigs.vdi uefi_fbsd_20gigs_fixed.vdi --variant Fixed

Then I conducted tests for the following configurations:

- VirtualBox + fixed size image

- VirtualBox + dynamic size image

- bhyve + virtio-blk

- bhyve + ahci-hd

The same image was used for all tests. The fixed-size image was produced with the command above, and the raw image for bhyve was created from the VirtualBox image with the qemu-img(1) tool.

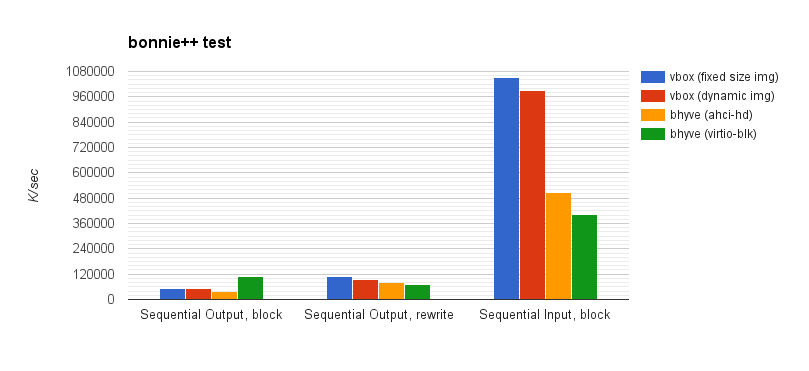

I started with the bonnie++ test, and the results surprised me, to put it mildly:

Here we can see that bhyve with virtio-blk shows the best write speed (which is expected), but the worst rewrite speed (a little surprising, though the gap is minimal) and the worst read speed (more than 2 times slower than VirtualBox, which is extremely surprising). After that I decided to take a few days' break and then try some different benchmarking methods.

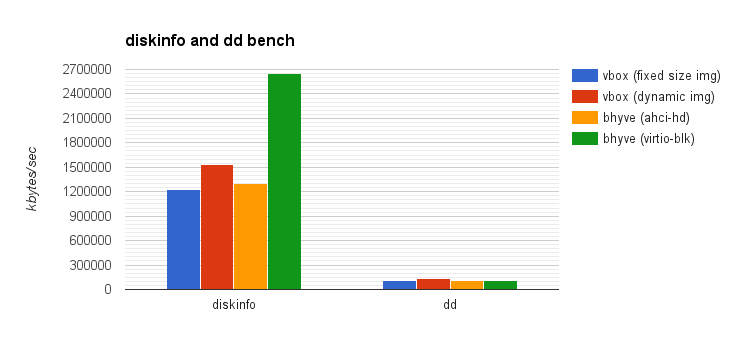

So, for read performance I used the diskinfo(8) tool this way:

diskinfo -tv ada0 | grep middle

and took the average of 16 runs. For writing, I used dd(1):

dd bs=1M count=2048 if=/dev/zero of=test conv=sync; sync; rm test

and again took the average of 16 runs. I got the following results:

And the numbers (all values are kbytes/sec):

| | vbox (fixed-size img) | vbox (dynamic img) | bhyve (ahci-hd) | bhyve (virtio-blk) |
|---|---|---|---|---|
| diskinfo (read) | 1232397 | 1528779 | 1296055 | 2647685 |
| dd (write) | 113737 | 135088 | 113889 | 115924 |

Frankly, this didn't help me figure out the state of things, because these results are roughly the opposite of what bonnie++ showed: according to diskinfo, bhyve with virtio-blk has a very high read speed, 1.7x faster than VirtualBox with the dynamic image. On the other hand, the numbers diskinfo reports are crazy: 2647 MB/s. That looks more like RAM transfer rates, so some sort of caching is probably involved here.
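The read side can be sketched in Python in the same spirit: pull the rate out of each `diskinfo -tv ... | grep middle` line, average the runs, and compare configurations. The exact line layout in the regex comment is an assumption about diskinfo's output; only the number right before `kbytes/sec` is used. Function names are illustrative:

```python
import re
from statistics import mean

# Assumed shape of the transfer-rate lines printed by `diskinfo -tv`, e.g.
#   "middle:     102400 kbytes in   0.681934 sec =   150161 kbytes/sec"
# Only the number between "=" and "kbytes/sec" is extracted.
KBPS_RE = re.compile(r"=\s*([\d.]+)\s*kbytes/sec")


def diskinfo_kbps(line: str) -> float:
    """Return the rate from one diskinfo transfer-rate line, in kbytes/sec."""
    match = KBPS_RE.search(line)
    if match is None:
        raise ValueError(f"no transfer rate found in: {line!r}")
    return float(match.group(1))


def average_kbps(lines: list[str]) -> float:
    """Average the rate over several runs (the post uses 16)."""
    return mean(diskinfo_kbps(line) for line in lines)


# Averaged diskinfo read rates (kbytes/sec), copied from the table above.
DISKINFO_KBPS = {
    "vbox (fixed-size img)": 1232397,
    "vbox (dynamic img)": 1528779,
    "bhyve (ahci-hd)": 1296055,
    "bhyve (virtio-blk)": 2647685,
}

virtio = DISKINFO_KBPS["bhyve (virtio-blk)"]
for name, kbps in DISKINFO_KBPS.items():
    if name != "bhyve (virtio-blk)":
        print(f"bhyve (virtio-blk) vs {name}: {virtio / kbps:.2f}x")
```

This prints 2.15x against the fixed-size VirtualBox image, 1.73x against the dynamic one and 2.04x against bhyve with ahci-hd.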

As for the dd(1) test, bhyve with virtio-blk is about 14% slower than VirtualBox with the dynamic-size image (or, put the other way, VirtualBox is 16.5% faster).
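A relative difference depends on which measurement is used as the baseline. This small Python sketch, using the two dd averages from the table, shows both readings of the gap between bhyve with virtio-blk and VirtualBox with the dynamic image (the function names are just illustrative):

```python
def percent_slower(slow: float, fast: float) -> float:
    """Gap between the two rates as a percentage of the faster one."""
    return (fast - slow) / fast * 100


def percent_faster(fast: float, slow: float) -> float:
    """Gap between the two rates as a percentage of the slower one."""
    return (fast - slow) / slow * 100


# Averaged dd write rates (kbytes/sec), copied from the table above.
VBOX_DYNAMIC_DD = 135088
BHYVE_VIRTIO_DD = 115924

print(f"bhyve (virtio-blk) is {percent_slower(BHYVE_VIRTIO_DD, VBOX_DYNAMIC_DD):.1f}% slower")
print(f"vbox (dynamic img) is {percent_faster(VBOX_DYNAMIC_DD, BHYVE_VIRTIO_DD):.1f}% faster")
```

It prints 14.2% and 16.5%: the same 19164 kbytes/sec gap, measured against different baselines.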

Conclusion

- For VirtualBox, when choosing between e1000 and virtio-net, e1000 definitely provides better performance, at least on FreeBSD hosts with FreeBSD guests

- virtio-net in bhyve is approx. 1.7x faster than VirtualBox with e1000

- I'll refrain from comments on I/O tests.

Thanks. Interesting to me as I am just getting started with virtualization on FreeBSD.

Hi,

I've been using VirtualBox for years with FreeBSD as the host and mainly Linux as the guest. Some time ago I was forced to run a Linux guest on a FreeBSD bhyve host (more on that below). And recently I've also used VirtualBox on a Windows host. I didn't run as many tests as you did; I mainly tested network performance and also ... see below :) And I have some remarks...

1) Network: Your results are interesting to me.

What do you mean by e1000? The Intel PRO/1000 adapter? The maximum throughput I have seen with this interface is about 500 Mbit/s, always bridged with a physical 1 Gbit/s card and tested from an external (physical) machine.

With virtio I've seen 940 Mbit/s (the full speed of a Gigabit interface) with an Ubuntu (16, 18) guest on a FreeBSD bhyve host and with a FreeBSD (12) guest in VirtualBox (6) on a Windows host. But I had network stability problems with the FreeBSD guest on Windows using virtio-net; after a few manual restarts of FreeBSD I switched to the slower, but hopefully stable, PRO/1000.

2) Disk: From what I've read, the best guest disk performance comes from a block device on ZFS (a little work with permissions is required).

You have to do something like "zfs create -V20G -o volmode=dev zdata/test_disk"; then you can use /dev/zvol/zdata/test_disk under bhyve. The same disk can be used in VirtualBox through a VMDK file created with "VBoxManage internalcommands createrawvmdk -filename test_disk.vmdk -rawdisk /dev/zvol/zdata/test_disk".

3) If you use FreeBSD as a host and need a "big" machine, you have to use bhyve.

I needed a 16-core, 32 GB RAM Ubuntu machine on a FreeBSD host. The practical limit in VirtualBox was 8 cores: with 10 cores the system was unstable, and with more ... I had problems even starting it.

Under bhyve I've successfully run 16-core (the official bhyve limit), 32 GB RAM machines, which have been running stably for a few months now.

And about the network: I'm now running virtio-net in the Ubuntu virtual machine and I have a stable 1 Gbit/s link. Under VirtualBox I had to tune the em driver (I didn't notice the virtio-net option...) to get a stable link under heavy load. And as I mentioned before: about 500 Mbit/s max :(

Regards,

Martin