I've been asked a couple of times how to set up an OpenBSD guest with libvirt/bhyve. It's fairly straightforward, but the minimum required libvirt version is 3.5.0, because it's the first version that allows specifying vgaconf settings.

First, download the installation image: https://ftp.openbsd.org/pub/OpenBSD/6.1/amd64/install61.fs and create a disk image:

truncate -s 2G openbsd61.raw

Create an initial XML (pay attention to the vgaconf='off' bit):
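As a rough sketch of what such a domain definition might look like, the snippet below writes a hypothetical openbsd61.xml into the current directory. The firmware path, bridge name, memory size, vCPU count and disk paths are assumptions, not values taken from this post, so adjust them for your host. The install image is attached as the second SATA disk, which matches the hd1a device used at the boot loader prompt later on.

```shell
#!/bin/sh
# Sketch only: writes a hypothetical openbsd61.xml to the current directory.
# Firmware path, bridge name, memory size and /path/to/... are assumptions.
cat > openbsd61.xml <<'EOF'
<domain type='bhyve'>
  <name>openbsd61</name>
  <memory unit='GiB'>1</memory>
  <vcpu>1</vcpu>
  <os>
    <type arch='x86_64'>hvm</type>
    <!-- assumed location of the bhyve UEFI firmware -->
    <loader readonly='yes' type='pflash'>/usr/local/share/uefi-firmware/BHYVE_UEFI.fd</loader>
  </os>
  <devices>
    <!-- target disk created with truncate -->
    <disk type='file' device='disk'>
      <driver name='file' type='raw'/>
      <source file='/path/to/openbsd61.raw'/>
      <target dev='hda' bus='sata'/>
    </disk>
    <!-- installation image; this block is removed after the install -->
    <disk type='file' device='disk'>
      <driver name='file' type='raw'/>
      <source file='/path/to/install61.fs'/>
      <target dev='hdb' bus='sata'/>
    </disk>
    <interface type='bridge'>
      <model type='virtio'/>
      <source bridge='virbr0'/>
    </interface>
    <!-- autoport needs libvirt 3.7.0; use an explicit port on older versions -->
    <graphics type='vnc' port='-1' autoport='yes'>
      <listen type='address' address='127.0.0.1'/>
    </graphics>
    <video>
      <driver vgaconf='off'/>
      <model type='gop' heads='1' primary='yes'/>
    </video>
  </devices>
</domain>
EOF
```

The part that matters for OpenBSD is the driver element with vgaconf='off' inside the video block; the rest is an ordinary bhyve UEFI guest definition.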

Now with virsh do:

virsh # define /path/to/openbsd61.xml

Domain openbsd61 defined from /path/to/openbsd61.xml

virsh # start openbsd61

Domain openbsd61 started

virsh # vncdisplay openbsd61

127.0.0.1:0

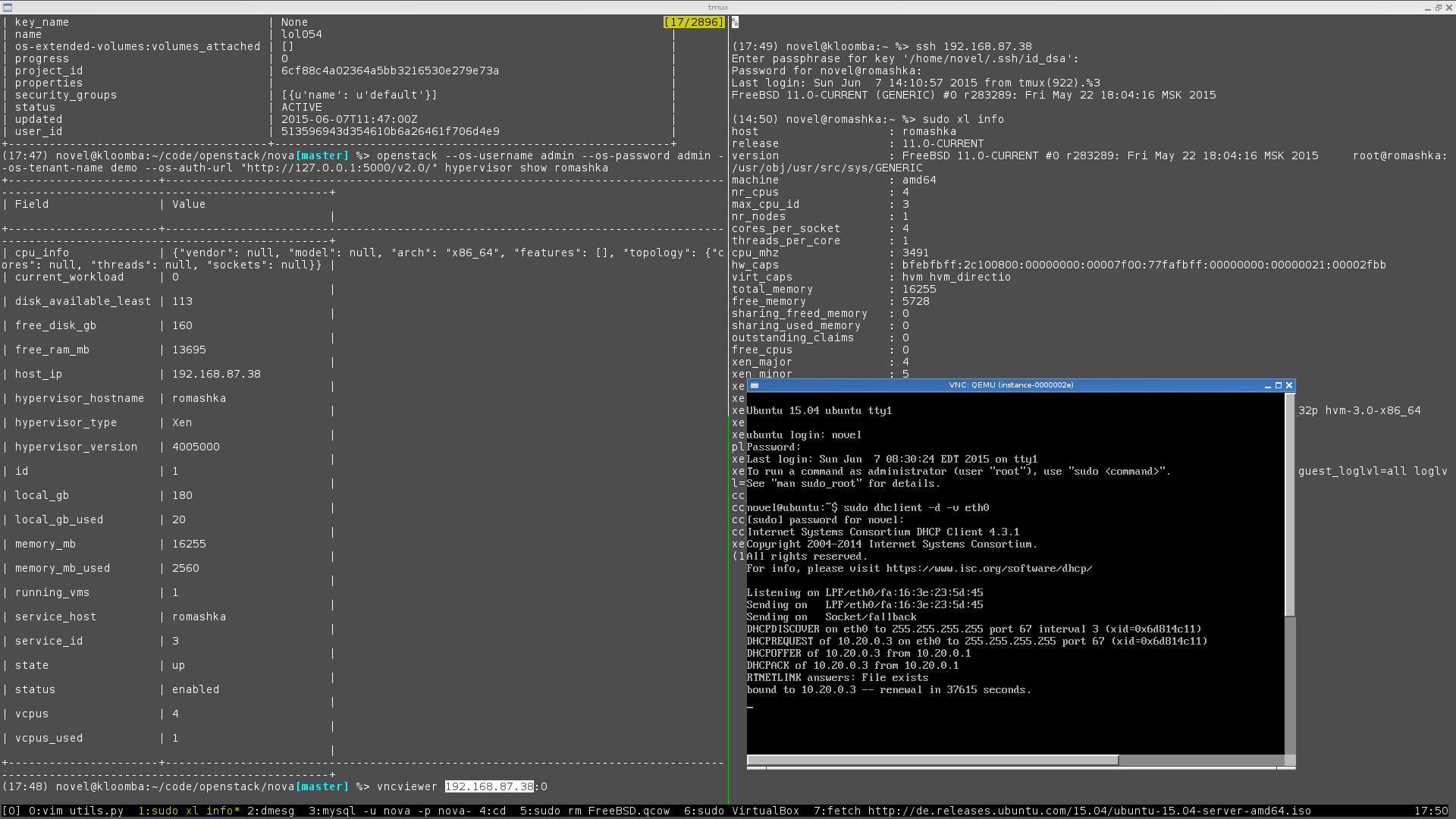

Using a VNC client, connect to the guest at the specified display:

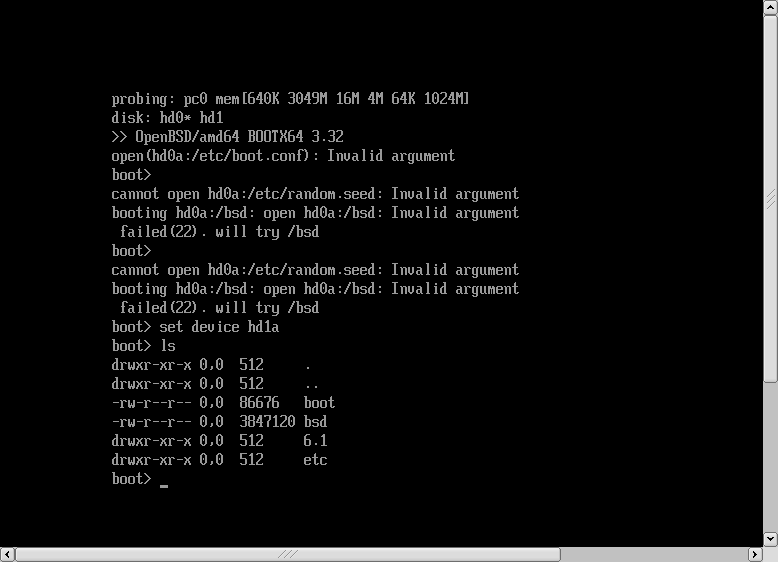

vncviewer :0

This gives a boot loader prompt. For some reason, it doesn't set the root device properly, so I have to do:

set device hd1a

boot

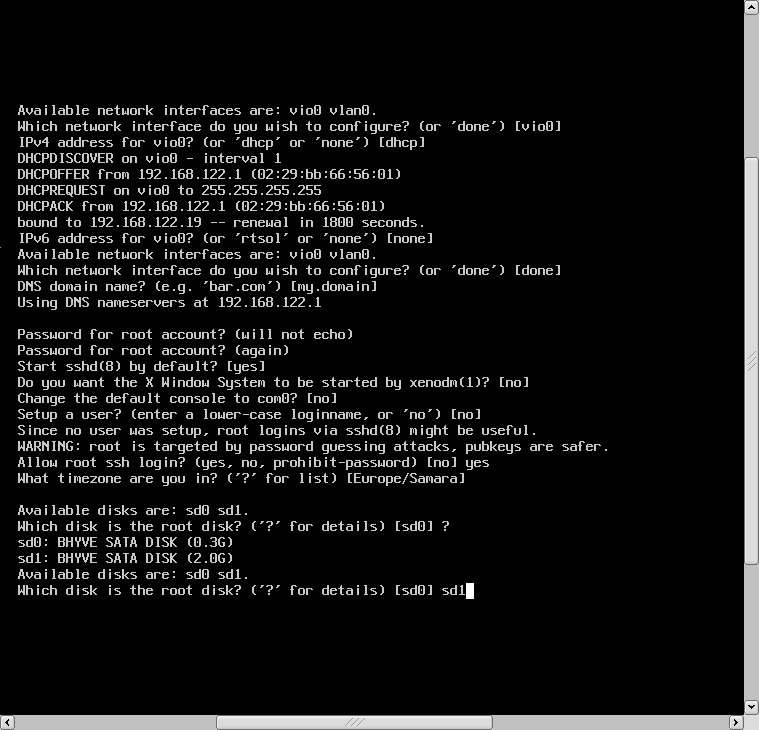

Then there'll be a number of installer questions (where I usually accept the defaults) until it asks which disk should be used as the root device. It's important to check this carefully and choose the right one (you can identify it by its size, for example); otherwise the installer might try to overwrite the install image.

When the installation finishes, shut down the guest, go back to virsh and edit the domain XML:

virsh # edit openbsd61

Remove the disk block corresponding to install61.fs, then start the domain again:

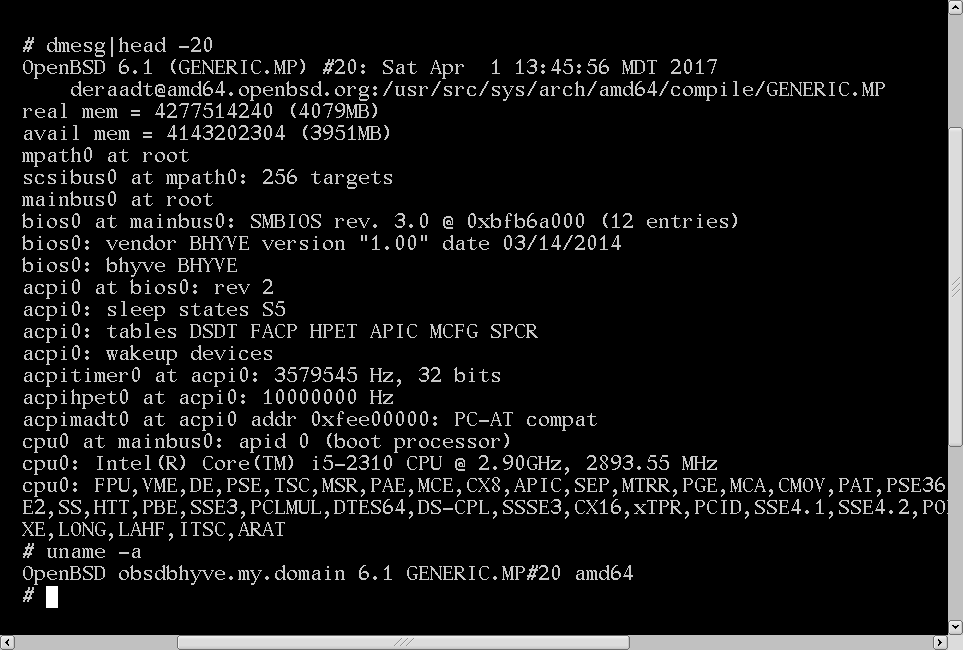

virsh # start openbsd61

Domain openbsd61 started

Now you should be able to connect to the guest via VNC or via SSH.

P.S. My sample XML uses the autoport feature, which will only be available starting with libvirt 3.7.0. If you're using an earlier version, specify the VNC port explicitly:

<graphics type='vnc' port='5904'>
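
Note that virsh vncdisplay reports a display number, while the graphics element takes a TCP port. By the usual VNC convention the port is 5900 plus the display number, so port 5904 means connecting with vncviewer :4 rather than :0. A small sketch of that mapping, where display_to_port is a hypothetical helper name and not part of virsh:

```shell
#!/bin/sh
# display_to_port: hypothetical helper, not a virsh command.
# Converts a VNC display such as "127.0.0.1:0" or ":4" to its TCP port,
# assuming the conventional base port of 5900.
display_to_port() {
    display="${1##*:}"          # keep only what follows the last colon
    echo $((5900 + display))
}

display_to_port 127.0.0.1:0     # prints 5900
display_to_port :4              # prints 5904
```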